
-- This post is based on the book Natural Language Processing with PyTorch (Korean edition: 파이토치로 배우는 자연어 처리, Hanbit Media).

-- The source code is available here.

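1. What Is an Embedding?

- Word embedding is a method that converts discrete word data into a dense vector representation.

- What is a discrete type?

Any input feature drawn from a finite set, such as characters, named entities, or a vocabulary, is called a discrete type.

- More broadly, embedding refers to the result of converting natural language into vectors, a numeric form that a machine can process, or to the entire process of doing so.

- Representative examples of such vectorization are one-hot encoding and TF-IDF, both of which produce sparse rather than dense vectors.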
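
To make the contrast between a sparse one-hot vector and a dense embedding concrete, here is a minimal sketch. The three-word vocabulary and the random embedding matrix are assumptions for illustration only; in practice the matrix values are learned during training.

```python
import numpy as np

# Hypothetical toy vocabulary; a real one would be built from a corpus.
vocab = ["cat", "dog", "fish"]
word_to_index = {word: i for i, word in enumerate(vocab)}


def one_hot(word):
    """Sparse representation: a single 1 at the word's index."""
    vec = np.zeros(len(vocab))
    vec[word_to_index[word]] = 1.0
    return vec


# Dense representation: one low-dimensional real-valued row per word.
# Random values stand in for weights that training would normally learn.
rng = np.random.default_rng(0)
embedding_matrix = rng.normal(size=(len(vocab), 2))


def embed(word):
    """Dense lookup: select the row that belongs to the word."""
    return embedding_matrix[word_to_index[word]]


print(one_hot("dog"))  # [0. 1. 0.]
print(embed("dog"))    # a 2-dimensional dense vector
```

Multiplying the one-hot vector by the embedding matrix selects the same row as the direct lookup, which is why an embedding layer can be implemented as a simple table lookup.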

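2. Trends and Types of Embedding Techniques

- Gradually shifting from statistics-based techniques to neural network techniques

- Statistics-based techniques

- Latent semantic analysis: applies a mathematical technique such as singular value decomposition to a matrix holding corpus statistics, such as word frequencies, in order to reduce the dimensionality of the vectors in that matrix. (The matrix can be a TF-IDF matrix, a word-context matrix, a PMI matrix, and so on.)

- Neural network techniques

- Began to attract attention after the Neural Probabilistic Language Model (NPLM) was published

- Because the architecture is flexible and highly expressive, these models can learn a substantial portion of the practically unlimited contexts found in natural language.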

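- From word-level to sentence-level embedding techniques

- Word-level embedding techniques

- Each vector captures the contextual meaning of its word, but identical word forms always map to the same vector, so the embedding cannot reflect every context in which the word appears. (Homonyms are hard to tell apart.)

- NPLM, Word2Vec, GloVe, FastText, etc.

- Sentence-level embedding techniques

- Began to attract attention after ELMo was published

- Because they capture the contextual meaning of the entire sequence rather than of individual words, they are more effective for transfer learning than word embedding techniques.

- Sentence-level embeddings can also tell homonyms apart, which is one reason they are receiving so much attention. (They make context easier to capture.)

- BERT, GPT, etc.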

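- From rule-based to end-to-end, and more recently to pre-training / fine-tuning

- In the 1990s, early NLP models relied on features extracted by hand.

- From the 2000s onward, end-to-end approaches took over: features are extracted automatically, and once data is fed in, the model handles it from start to finish. (A representative example is the sequence-to-sequence model.)

- Since the ELMo model was proposed in 2018, NLP models have been evolving around pre-training and fine-tuning.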
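
As a rough illustration of the latent semantic analysis idea mentioned in this section, the sketch below applies truncated SVD to a small term-document count matrix. The words, the counts, and the helper name lsa_embeddings are all hypothetical; a real matrix would be computed from a corpus and would be far larger.

```python
import numpy as np

# Hypothetical term-document counts (rows: words, columns: documents).
words = ["cat", "dog", "stock", "market"]
counts = np.array([
    [2.0, 1.0, 0.0, 0.0],
    [1.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 2.0, 1.0],
    [0.0, 0.0, 1.0, 2.0],
])


def lsa_embeddings(matrix, k):
    """Reduce each row of `matrix` to k dimensions with truncated SVD."""
    u, s, _ = np.linalg.svd(matrix, full_matrices=False)
    # Keep the k largest singular directions and scale by singular values.
    return u[:, :k] * s[:k]


word_vectors = lsa_embeddings(counts, k=2)
cat, dog, stock = word_vectors[0], word_vectors[1], word_vectors[2]

# Words that occur in the same documents end up close together.
print(np.linalg.norm(cat - dog) < np.linalg.norm(cat - stock))  # True
```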

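3. Types of Embeddings

- Matrix factorization-based methods

- Embed words by decomposing the original matrix that holds corpus information

- After decomposition, the embedding is formed by using only one of the two resulting matrices, or by summing or concatenating the two.

- GloVe, Swivel, etc.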

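- Prediction-based methods

- Predict which words will appear around a given word, or

- predict the next word given the preceding words, or

- remove some words from a sentence and learn to guess what they were

- Neural network-based methods belong here.

- Word2Vec, FastText, BERT, ELMo, GPT, etc.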

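- Topic-based methods

- Perform embedding by inferring the latent topics of a given document

- LDA belongs here.

- Once training is complete, each document is converted into a probability vector describing its distribution over topics, so LDA can be regarded as a kind of embedding technique.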

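4. How Word Embeddings Are Trained

Word embeddings are trained on unlabeled data, yet they are trained with supervised learning. The apparent contradiction is resolved as follows.

- Construct an auxiliary task in which the data is implicitly labeled.

- Given a sequence of words, predict the next word (the language modeling task)

- Given the word sequences before and after, predict the missing word

- Given a word, predict the words that appear within a window around it, regardless of position

- The choice of auxiliary task depends on the algorithm designer's intuition and on computational cost.

- Examples of auxiliary tasks:

GloVe, CBOW, skip-gram, etc.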
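
The three auxiliary tasks listed above can be sketched as functions that turn raw, unlabeled tokens into (input, label) pairs. This is only an illustration of how the implicit labels arise; the function names and the example sentence are made up for this sketch, and real implementations add vocabulary handling, padding, and batching.

```python
def next_word_examples(tokens):
    """Language modeling: (preceding words, next word)."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cbow_examples(tokens, window=2):
    """CBOW-style: (surrounding words, missing center word)."""
    examples = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        examples.append((left + right, target))
    return examples


def skipgram_examples(tokens, window=2):
    """Skip-gram-style: (center word, one word inside the window)."""
    examples = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:
                examples.append((center, tokens[j]))
    return examples


tokens = "the cat sat on the mat".split()
print(next_word_examples(tokens)[1])       # (['the', 'cat'], 'sat')
print(cbow_examples(tokens, window=1)[1])  # (['the', 'sat'], 'cat')
print(skipgram_examples(tokens, window=1)[:3])
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
```

In every case the label comes from the text itself, which is why no manual annotation is needed even though the training signal is supervised.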

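5. Pre-trained Word Embeddings

- Word embeddings pre-trained on large corpora (from Google, Wikipedia, and so on) can be downloaded and used.

- Let's look at some properties of word embeddings and an example of using pre-trained word embeddings in NLP tasks.

5.1 Loading Embeddings

Let's look at PreTrainedEmbeddings, a utility class that loads and handles embeddings efficiently. (The code can be found at this link.) For fast lookup, the class builds an in-memory index of all word vectors using the annoy package, which implements approximate nearest neighbor search.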
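
To show what a nearest neighbor index is computing, here is an exhaustive (brute-force) version written with NumPy. It is not how annoy works internally: annoy builds a tree-based index so that it can avoid this linear scan and return approximate answers quickly. The function name and the sample vectors are assumptions for illustration.

```python
import numpy as np


def nearest_neighbors(query, vectors, n=1):
    """Return indices of the n rows of `vectors` closest to `query`."""
    # Euclidean distance from the query to every stored vector.
    distances = np.linalg.norm(vectors - query, axis=1)
    # Sort by distance and keep the n smallest.
    return np.argsort(distances)[:n].tolist()


vectors = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]])
print(nearest_neighbors(np.array([0.9, 0.1]), vectors, n=2))  # [1, 0]
```

The scan costs time proportional to the vocabulary size for every query, which is the cost an index over hundreds of thousands of word vectors is meant to avoid.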

5.1.1 Installing and Importing Packages

    # Install the annoy package.
    !pip install annoy

    import torch
    import torch.nn as nn
    from tqdm import tqdm
    from annoy import AnnoyIndex
    import numpy as np

5.1.2 A Look at the Class

- __init__

- word_to_index : mapping from words to integers

- word_vectors : list of vector arrays

- Constructor of the PreTrainedEmbeddings class; builds the index

- from_embeddings_file(cls, embedding_file)

- Use this method when loading from a file.

- Creates an object from a pre-trained vector file.

- Returns an instance of PreTrainedEmbeddings

- get_embedding(self, word)

- Returns the embedding of the given word.

- The return value is a numpy.ndarray

- get_closest_to_vector(self, vector, n=1)

- Given a vector, returns its n nearest neighbors

- Returned in the form [str, str, ...].

- compute_and_print_analogy(self, word1, word2, word3)

- Prints the analogy result in the form print("{} : {} :: {} : {}".format(word1, word2, word3, word4)).

    class PreTrainedEmbeddings(object):
        """A wrapper class for using pre-trained word vectors."""

        def __init__(self, word_to_index, word_vectors):
            """
            Args:
                word_to_index (dict): mapping from words to integers
                word_vectors (list of numpy arrays)
            """
            self.word_to_index = word_to_index
            self.word_vectors = word_vectors
            self.index_to_word = {v: k for k, v in self.word_to_index.items()}

            # Annoy index over all word vectors, using Euclidean distance
            self.index = AnnoyIndex(len(word_vectors[0]), metric='euclidean')
            print("Building the index!")
            for _, i in self.word_to_index.items():
                self.index.add_item(i, self.word_vectors[i])
            self.index.build(50)
            print("Done!")

        @classmethod
        def from_embeddings_file(cls, embedding_file):
            # Use this method when loading from a file, e.g.
            # embeddings = PreTrainedEmbeddings.from_embeddings_file(file_name)
            """Create an object from a pre-trained vector file.

            The vector file has the following format:
                word0 x0_0 x0_1 x0_2 x0_3 ... x0_N
                word1 x1_0 x1_1 x1_2 x1_3 ... x1_N

            Args:
                embedding_file (str): location of the file
            Returns:
                an instance of PreTrainedEmbeddings
            """
            word_to_index = {}
            word_vectors = []

            # Explicit UTF-8 so the platform's default encoding does not matter
            with open(embedding_file, encoding="utf-8") as fp:
                for line in fp:
                    line = line.split(" ")
                    word = line[0]
                    vec = np.array([float(x) for x in line[1:]])

                    word_to_index[word] = len(word_to_index)
                    word_vectors.append(vec)

            return cls(word_to_index, word_vectors)

        def get_embedding(self, word):
            """
            Args:
                word (str)
            Returns:
                the embedding (numpy.ndarray)
            """
            return self.word_vectors[self.word_to_index[word]]

        def get_closest_to_vector(self, vector, n=1):
            """Given a vector, return its n nearest neighbors.

            Args:
                vector (np.ndarray): must match the size of the vectors
                    in the Annoy index
                n (int): number of neighbors to return
            Returns:
                [str, str, ...]: the words closest to the given vector.
                    The words are not ordered by distance.
            """
            nn_indices = self.index.get_nns_by_vector(vector, n)
            return [self.index_to_word[neighbor] for neighbor in nn_indices]

        def compute_and_print_analogy(self, word1, word2, word3):
            """Print the result of an analogy computed with word embeddings.

            word1 is to word2 as word3 is to __.
            This method prints word1 : word2 :: word3 : word4

            Args:
                word1 (str)
                word2 (str)
                word3 (str)
            """
            # Look up the vectors with get_embedding
            vec1 = self.get_embedding(word1)
            vec2 = self.get_embedding(word2)
            vec3 = self.get_embedding(word3)

            # Compute the embedding of the fourth word
            spatial_relationship = vec2 - vec1
            vec4 = vec3 + spatial_relationship

            # Fetch the nearest neighbors with get_closest_to_vector (n = 4)
            closest_words = self.get_closest_to_vector(vec4, n=4)
            existing_words = set([word1, word2, word3])
            closest_words = [word for word in closest_words
                             if word not in existing_words]

            if len(closest_words) == 0:
                print("Could not find nearest neighbors for the computed vector!")
                return

            for word4 in closest_words:
                print("{} : {} :: {} : {}".format(word1, word2, word3, word4))
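
The vector arithmetic inside compute_and_print_analogy can be checked by hand on a toy example. The two-dimensional vectors below are invented so that the arithmetic is easy to follow; they are not taken from GloVe. Unlike the class above, this sketch filters out the input words before searching instead of after, and it uses an exhaustive search in place of the Annoy index.

```python
import math

# Hand-made toy vectors (hypothetical, chosen only for illustration).
toy_vectors = {
    "man": (1.0, 0.0),
    "woman": (1.0, 1.0),
    "king": (3.0, 0.0),
    "queen": (3.0, 1.0),
    "apple": (-2.0, 4.0),
}


def analogy(word1, word2, word3, vectors):
    """Solve word1 : word2 :: word3 : ? by vector arithmetic."""
    v1, v2, v3 = vectors[word1], vectors[word2], vectors[word3]
    # vec4 = vec3 + (vec2 - vec1), computed per dimension.
    target = tuple(c + (b - a) for a, b, c in zip(v1, v2, v3))
    # Exclude the three input words, then take the closest remaining word.
    candidates = [w for w in vectors if w not in {word1, word2, word3}]
    return min(candidates, key=lambda w: math.dist(vectors[w], target))


print(analogy("man", "king", "woman", toy_vectors))  # queen
```

Here king - man = (2, 0), and adding that offset to woman gives (3, 1), which is exactly the toy vector for queen.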

5.1.3 Downloading the GloVe Data

    # Download the GloVe data.
    !wget http://nlp.stanford.edu/data/glove.6B.zip
    !unzip glove.6B.zip
    !mkdir -p data/glove
    !mv glove.6B.100d.txt data/glove

5.1.4 Creating the Embeddings

    embeddings = PreTrainedEmbeddings.from_embeddings_file('data/glove/glove.6B.100d.txt')
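
The file format that from_embeddings_file expects (one word per line, followed by space-separated floats) can be tried out without downloading anything. The sketch below parses two made-up lines from an in-memory file; the helper name parse_embedding_lines and the sample values are hypothetical, and plain lists are used in place of NumPy arrays to keep it dependency-free.

```python
import io


def parse_embedding_lines(fp):
    """Parse 'word x0 x1 ... xN' lines into an index and a vector list."""
    word_to_index = {}
    word_vectors = []
    for line in fp:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        word_to_index[parts[0]] = len(word_to_index)
        word_vectors.append([float(x) for x in parts[1:]])
    return word_to_index, word_vectors


# Two made-up lines in the same layout as a GloVe text file.
sample = io.StringIO("the 0.1 0.2 0.3\ncat 0.4 0.5 0.6\n")
word_to_index, word_vectors = parse_embedding_lines(sample)
print(word_to_index)    # {'the': 0, 'cat': 1}
print(word_vectors[1])  # [0.4, 0.5, 0.6]
```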

5.1.5 결과 확인해보기

- 성별 명사와 대명사의 관계

-

embeddings.compute_and_print_analogy('man', 'he', 'woman')

-

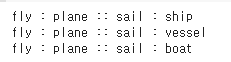

- 동사 - 명사 관계

-

embeddings.compute_and_print_analogy('fly', 'plane', 'sail')

-

- 명사 - 명사 관계

-

embeddings.compute_and_print_analogy('cat', 'kitten', 'dog')

-

- 상위어 (더 넓은 범주)

-

embeddings.compute_and_print_analogy('blue', 'color', 'dog')

-

- 부분에서 전체 개념의 관계

-

embeddings.compute_and_print_analogy('leg', 'legs', 'hand')

-

- 방식 차이

-

embeddings.compute_and_print_analogy('talk', 'communicate', 'read')

-

- 전체 의미 표현

-

embeddings.compute_and_print_analogy('blue', 'democrat', 'red')

-

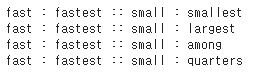

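5.1.6 Problems with Embeddings

Word vectors are based on co-occurrence information, so incorrect relationships are sometimes produced.

- Cases that produce wrong answers. (Because the encoding is based on co-occurrence information, errors like this are to be expected.)

    embeddings.compute_and_print_analogy('fast', 'fastest', 'small')

▶ The expected answer is smallest, but other words such as largest and among came out instead.

- The well-known gender encoding embedded in word embeddings. (Protected attributes such as gender call for caution, because they can introduce unwanted bias in downstream models.)

    embeddings.compute_and_print_analogy('man', 'king', 'woman')

- Sometimes the vectors also reproduce cultural gender stereotypes encoded in them.

    embeddings.compute_and_print_analogy('man', 'doctor', 'woman')

▶ This reproduced the cultural gender stereotype that men are doctors and women are nurses.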
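
One simple way to probe this kind of bias, beyond reading analogy output, is to measure how strongly a word vector leans along the direction between two gendered words. The sketch below is only an assumption about how such a check could look. The function names are hypothetical, and the two-dimensional vectors are invented solely to exercise the code; they are not measurements from GloVe. With the class above, real vectors could be obtained through embeddings.get_embedding(word).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def gender_lean(word, vectors, male="man", female="woman"):
    """Positive: leans toward `male`; negative: leans toward `female`."""
    direction = [m - f for m, f in zip(vectors[male], vectors[female])]
    return cosine(vectors[word], direction)


# Invented toy vectors, NOT real embedding values.
toy = {
    "man": (1.0, 0.2),
    "woman": (-1.0, 0.2),
    "doctor": (0.5, 1.0),
    "nurse": (-0.6, 1.0),
}

for word in ("doctor", "nurse"):
    print(word, round(gender_lean(word, toy), 3))
```

A score far from zero for an occupation word would suggest that the embedding has absorbed a gender association that a downstream model could inherit.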

So far we have looked at some basic properties and characteristics of embeddings. The next post walks through an example of training CBOW embeddings.

Reference: https://heung-bae-lee.github.io/2020/01/16/NLP_01/
