-- This post was written with reference to the book 파이토치로 배우는 자연어 처리 (Natural Language Processing with PyTorch; Hanbit Media, 2021).

-- Source code: https://github.com/rickiepark/nlp-with-pytorch

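1. Surname dataset

- A dataset of 10,000 surnames from 18 nationalities

- Highly imbalanced

  - The top 3 classes account for about 60% of the data

  - 27% English, 21% Russian, 14% Arabic

  - The frequencies of the remaining 15 nationalities decrease steadily

  - This is partly a property of the languages themselves (the more widely a language is used, the more surnames it naturally has)

- There is a meaningful, intuitive relationship between a person's country of origin and the spelling of their surname

  - In other words, some surnames are characteristic of particular nationalities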
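Class shares like the ones above can be computed with pandas `value_counts(normalize=True)`. A minimal sketch on a small made-up DataFrame (the rows are hypothetical, not the real dataset):

```python
import pandas as pd

# Hypothetical toy data standing in for the surname CSV
df = pd.DataFrame({
    "surname": ["Smith", "Jones", "Brown", "Ivanov", "Petrov", "Haddad", "Rossi", "Muller"],
    "nationality": ["English", "English", "English", "Russian", "Russian",
                    "Arabic", "Italian", "German"],
})

# Share of each nationality, sorted from most to least frequent
shares = df.nationality.value_counts(normalize=True)
print(shares)

# Combined share of the three most frequent classes
top3_share = shares.iloc[:3].sum()
print(f"top-3 share: {top3_share:.1%}")
```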

2. 데이터 전처리

아래와 같이 처리한 데이터를 사용했다. 해당 코드에서는 아래 방식을 거쳐져서 미리 처리된 데이터를 사용한다. 데이터를 나누는 코드는 아래

- 불균형 줄이기

- 원본 데이터셋은 70% 이상이 러시아 이름

- 샘플링이 편향되었거나 러시아에 고유한 성씨가 많기 때문으로 추정

- 러시아 성씨의 부분집합을 랜덤하게 선택하여 편중된 클래스를 "서브샘플링" 해줌

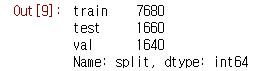

- 국적을 기반으로 모아 3세트로 split

- train = 70%

- validation = 15%

- test = 15%

- 세트간 레이블 분포를 고르게 유지 시킴
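The two preprocessing steps above can be sketched with pandas. This is a minimal illustration under assumptions, not the book's preprocessing code: the function names `subsample_class` and `stratified_split`, the random seed, and the toy data are all hypothetical. Splitting inside each nationality group is what keeps the label distribution the same across the three sets.

```python
import pandas as pd

def subsample_class(df, label, n_keep, seed=0):
    """Hypothetical helper: keep a random subset of n_keep rows for one class."""
    majority = df[df.nationality == label].sample(n=n_keep, random_state=seed)
    others = df[df.nationality != label]
    return pd.concat([majority, others], ignore_index=True)

def stratified_split(df, train_pct=70, val_pct=15, seed=0):
    """Hypothetical helper: split each nationality 70/15/15 using integer arithmetic."""
    parts = []
    for _, group in df.groupby("nationality"):
        group = group.sample(frac=1.0, random_state=seed)  # shuffle within the class
        n = len(group)
        n_train = n * train_pct // 100
        n_val = n * val_pct // 100
        labels = (["train"] * n_train + ["val"] * n_val
                  + ["test"] * (n - n_train - n_val))
        parts.append(group.assign(split=labels))
    return pd.concat(parts, ignore_index=True)

# Hypothetical toy data: 100 "Russian" rows and 20 rows each for two other classes
toy = pd.DataFrame({
    "surname": [f"name{i}" for i in range(140)],
    "nationality": ["Russian"] * 100 + ["English"] * 20 + ["Arabic"] * 20,
})

balanced = subsample_class(toy, "Russian", n_keep=20)
final = stratified_split(balanced)
print(final.groupby(["nationality", "split"]).size())
```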

▶ A quick look at the data

```python
# Read raw data
surnames = pd.read_csv(args.raw_dataset_csv, header=0)
surnames.head()
```

- `surnames.head()` shows what the raw surnames DataFrame looks like.

```python
# Unique classes
set(surnames.nationality)
```

- This returns the set of 18 nationalities.

```python
# Write split data to file
final_surnames = pd.DataFrame(final_list)
final_surnames.split.value_counts()
```

- This confirms that the data is split 70 : 15 : 15.

3. import

All the required libraries are imported here.

```python
from argparse import Namespace
from collections import Counter
import json
import os
import string

import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader
import tqdm
```

4. Building a custom Dataset (SurnameDataset)

- Inherits from PyTorch's `Dataset`

- Follows the `Dataset` interface

  - `__len__` method: returns the length of the dataset

  - `__getitem__` method: returns the vectorized surname and the index of its nationality
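For a map-style dataset like this one, what `DataLoader` relies on is essentially these two methods. A minimal sketch with a hypothetical `ToySurnameDataset` that returns the same kind of dictionary as `SurnameDataset`; the two features (length and vowel count) are a made-up stand-in for the real vectorizer:

```python
import torch
from torch.utils.data import Dataset

class ToySurnameDataset(Dataset):
    """Hypothetical dataset of (surname, nationality index) pairs kept in memory."""

    def __init__(self, pairs):
        self.pairs = pairs

    def __len__(self):
        # Number of data points
        return len(self.pairs)

    def __getitem__(self, index):
        surname, label = self.pairs[index]
        # Stand-in feature vector: surname length and number of vowels
        features = torch.tensor(
            [len(surname), sum(ch in "aeiou" for ch in surname.lower())],
            dtype=torch.float32,
        )
        return {"x_surname": features, "y_nationality": label}

ds = ToySurnameDataset([("Smith", 0), ("Ivanov", 1), ("Haddad", 2)])
print(len(ds))  # 3
print(ds[1])
```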

▶ Walking through the SurnameDataset class

The class is long, so to make it easier to follow I'll take it apart and explain it one method at a time. The complete class is listed at the end of the post.

1. `__init__` method

```python
def __init__(self, surname_df, vectorizer):
    """
    Args:
        surname_df (pandas.DataFrame): the dataset
        vectorizer (SurnameVectorizer): a SurnameVectorizer object
    """
    self.surname_df = surname_df
    self._vectorizer = vectorizer

    self.train_df = self.surname_df[self.surname_df.split=='train']
    self.train_size = len(self.train_df)

    self.val_df = self.surname_df[self.surname_df.split=='val']
    self.validation_size = len(self.val_df)

    self.test_df = self.surname_df[self.surname_df.split=='test']
    self.test_size = len(self.test_df)

    self._lookup_dict = {'train': (self.train_df, self.train_size),
                         'val': (self.val_df, self.validation_size),
                         'test': (self.test_df, self.test_size)}

    self.set_split('train')

    # Compute class weights
    # Number of surnames per nationality, as a dict {nationality: count}
    class_counts = surname_df.nationality.value_counts().to_dict()

    def sort_key(item):
        # self._vectorizer: the SurnameVectorizer object
        # nationality_vocab: Vocabulary mapping nationalities to integers (attribute of SurnameVectorizer)
        # lookup_token: Vocabulary method returning the index of a token
        return self._vectorizer.nationality_vocab.lookup_token(item[0])

    # Sort the (nationality, count) pairs by each nationality's vocabulary index
    sorted_counts = sorted(class_counts.items(), key=sort_key)
    # Class weight = inverse of the class frequency
    frequencies = [count for _, count in sorted_counts]
    self.class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)
```

- Constructor

  - Takes `surname_df` (the dataset) and `vectorizer` (a SurnameVectorizer object) as arguments

  - Defines `train_df` / `train_size`, `val_df` / `validation_size`, and `test_df` / `test_size`

  - Contains a nested function, `sort_key(item)`

    - It is used when computing the class weights: sorting the counts by each nationality's vocabulary index makes `class_weights[i]` line up with class index `i`, and taking the inverse frequency gives rarer nationalities larger weights
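The weighting logic can be checked in isolation. A minimal sketch, assuming a hypothetical nationality-to-index dict in place of `nationality_vocab.lookup_token` and made-up counts. A weight tensor like this is typically passed as the `weight` argument of `nn.CrossEntropyLoss` so that mistakes on rare classes cost more:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for nationality_vocab: nationality -> index
nationality_to_index = {"Arabic": 0, "English": 1, "Russian": 2}

# Made-up counts, ordered by frequency as value_counts().to_dict() would give them
class_counts = {"English": 50, "Russian": 25, "Arabic": 10}

# Order the (nationality, count) pairs by vocabulary index so position i matches class i
sorted_counts = sorted(class_counts.items(), key=lambda item: nationality_to_index[item[0]])
frequencies = [count for _, count in sorted_counts]
class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)
# [1/10, 1/50, 1/25] for Arabic, English, Russian

# Weighted loss on random logits for 4 examples and 3 classes
loss_func = nn.CrossEntropyLoss(weight=class_weights)
logits = torch.randn(4, 3)
targets = torch.tensor([0, 1, 2, 1])
loss = loss_func(logits, targets)
print(class_weights, loss.item())
```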

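2. load_dataset_and_make_vectorizer method

```python
@classmethod
def load_dataset_and_make_vectorizer(cls, surname_csv):
    """Load the dataset and create a new SurnameVectorizer

    Args:
        surname_csv (str): location of the dataset
    Returns:
        an instance of SurnameDataset
    """
    # cls is the SurnameDataset class itself
    surname_df = pd.read_csv(surname_csv)
    train_surname_df = surname_df[surname_df.split=='train']
    # SurnameVectorizer.from_dataframe returns a SurnameVectorizer whose
    # surname_vocab and nationality_vocab are built from the training split
    return cls(surname_df, SurnameVectorizer.from_dataframe(train_surname_df))
```

- Returns an instance of SurnameDataset.

- When a new dataset is created later in the training code, this method is used:

  - `dataset = SurnameDataset.load_dataset_and_make_vectorizer(surname_csv)`, where `surname_csv` is the path to the preprocessed CSV file

- `SurnameVectorizer.from_dataframe` builds a SurnameVectorizer holding `surname_vocab` and `nationality_vocab` from the training rows only, and `cls(...)` then combines the full DataFrame with that vectorizer into a new dataset.

- That is why the return value is described as a SurnameDataset instance.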
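The key idea of this factory method is that the vectorizer is fitted on the training rows only, while the dataset keeps every split. A minimal sketch of the same `@classmethod` pattern with hypothetical `TinyVectorizer` / `TinyDataset` classes; it takes a DataFrame instead of a CSV path so it runs without files, and the character set is a simplified stand-in for the real SurnameVectorizer (covered in the next post):

```python
import pandas as pd

class TinyVectorizer:
    """Hypothetical vectorizer that only remembers the characters and labels it has seen."""

    def __init__(self, chars, nationalities):
        self.chars = chars
        self.nationalities = nationalities

    @classmethod
    def from_dataframe(cls, df):
        chars = sorted(set("".join(df.surname)))
        nationalities = sorted(set(df.nationality))
        return cls(chars, nationalities)

class TinyDataset:
    """Hypothetical dataset mirroring SurnameDataset's constructor signature."""

    def __init__(self, surname_df, vectorizer):
        self.surname_df = surname_df
        self._vectorizer = vectorizer

    @classmethod
    def from_df_and_make_vectorizer(cls, surname_df):
        # Fit on the training split only; keep all splits in the dataset
        train_df = surname_df[surname_df.split == "train"]
        return cls(surname_df, TinyVectorizer.from_dataframe(train_df))

df = pd.DataFrame({
    "surname": ["Abe", "Bo", "Zed"],
    "nationality": ["Japanese", "Chinese", "English"],
    "split": ["train", "train", "test"],
})
dataset = TinyDataset.from_df_and_make_vectorizer(df)
print(type(dataset).__name__)     # TinyDataset
print(dataset._vectorizer.chars)  # characters from the training rows only
```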

3. load_dataset_and_load_vectorizer method

```python
@classmethod
def load_dataset_and_load_vectorizer(cls, surname_csv, vectorizer_filepath):
    """Load the dataset and a previously saved SurnameVectorizer.
    Used when reusing a cached SurnameVectorizer.

    Args:
        surname_csv (str): location of the dataset
        vectorizer_filepath (str): location of the saved SurnameVectorizer
    Returns:
        an instance of SurnameDataset
    """
    surname_df = pd.read_csv(surname_csv)
    # load_vectorizer_only: loads a SurnameVectorizer object from a file.
    # It returns SurnameVectorizer.from_serializable(json.load(fp)),
    # i.e. a SurnameVectorizer instance rebuilt from the saved JSON
    vectorizer = cls.load_vectorizer_only(vectorizer_filepath)
    # Combine the DataFrame and the loaded vectorizer into a SurnameDataset instance
    return cls(surname_df, vectorizer)
```

- The method reads the SurnameVectorizer saved at `vectorizer_filepath` (which holds `surname_vocab` / `nationality_vocab`), and the final `return cls(...)` combines it with the DataFrame into a SurnameDataset instance (`cls` refers to SurnameDataset).
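One common way to use the two factory methods together is "build once, then reuse": fit and save the vectorizer on the first run, and load the cached file afterwards. A minimal sketch of that decision, where `build_vocab` and `load_or_build_vocab` are hypothetical helpers and a sorted character list stands in for the vectorizer:

```python
import json
import os
import tempfile

def build_vocab(surnames):
    """Hypothetical stand-in for fitting a vectorizer: sorted unique characters."""
    return sorted(set("".join(surnames)))

def load_or_build_vocab(path, surnames):
    """Reuse the cached vocabulary if the file exists, otherwise build and save it."""
    if os.path.exists(path):
        with open(path) as fp:
            return json.load(fp), "loaded"
    vocab = build_vocab(surnames)
    with open(path, "w") as fp:
        json.dump(vocab, fp)
    return vocab, "built"

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "vectorizer.json")
    first, how_first = load_or_build_vocab(path, ["Kim", "Lee"])
    # The second call ignores its surnames argument because the cache exists
    second, how_second = load_or_build_vocab(path, ["Zhang"])

print(how_first, how_second)  # built loaded
print(first == second)        # True
```

Reusing the saved file matters because the indices in a rebuilt vocabulary could differ from the ones a trained model expects.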

4. load_vectorizer_only / save_vectorizer methods

- `load_vectorizer_only` loads a SurnameVectorizer object from a file; think of the vectorizer as the object that holds `surname_vocab` / `nationality_vocab`.

- `save_vectorizer` converts the vectorizer into a serializable dict with `to_serializable` and writes it to disk as JSON.

```python
@staticmethod
def load_vectorizer_only(vectorizer_filepath):
    """Static method that loads a SurnameVectorizer object from a file

    Args:
        vectorizer_filepath (str): location of the serialized SurnameVectorizer
    Returns:
        an instance of SurnameVectorizer
    """
    with open(vectorizer_filepath) as fp:
        # from_serializable rebuilds surname_vocab / nationality_vocab from the
        # deserialized dict and returns them wrapped in a SurnameVectorizer
        return SurnameVectorizer.from_serializable(json.load(fp))

def save_vectorizer(self, vectorizer_filepath):
    """Save the SurnameVectorizer to disk as JSON

    Args:
        vectorizer_filepath (str): where to save the SurnameVectorizer
    """
    with open(vectorizer_filepath, "w") as fp:
        # to_serializable converts the vectorizer (and its vocabularies)
        # into a JSON-serializable dict
        json.dump(self._vectorizer.to_serializable(), fp)
```
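The `to_serializable` / `from_serializable` pair is what makes this JSON round trip work. A minimal sketch with a hypothetical `MiniVocabulary` class; the dict layout (`token_to_idx`) is an assumption, and the real Vocabulary class is covered in the next post:

```python
import io
import json

class MiniVocabulary:
    """Hypothetical vocabulary: token -> index mapping that can be saved as JSON."""

    def __init__(self, token_to_idx=None):
        self._token_to_idx = dict(token_to_idx or {})

    def add_token(self, token):
        if token not in self._token_to_idx:
            self._token_to_idx[token] = len(self._token_to_idx)
        return self._token_to_idx[token]

    def lookup_token(self, token):
        return self._token_to_idx[token]

    def to_serializable(self):
        # Plain dict of JSON-friendly types
        return {"token_to_idx": self._token_to_idx}

    @classmethod
    def from_serializable(cls, contents):
        # Rebuild an equivalent object from the dict produced by to_serializable
        return cls(**contents)

vocab = MiniVocabulary()
for nationality in ["English", "Russian", "Arabic"]:
    vocab.add_token(nationality)

# Save and reload through JSON (an in-memory buffer stands in for a file)
buffer = io.StringIO()
json.dump(vocab.to_serializable(), buffer)
buffer.seek(0)
restored = MiniVocabulary.from_serializable(json.load(buffer))

print(restored.lookup_token("Arabic"))  # 2
```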

5. get_vectorizer / set_split

```python
def get_vectorizer(self):
    """Return the vectorizer"""
    return self._vectorizer

def set_split(self, split="train"):
    """Select the split to use, based on the split column of the DataFrame

    Args:
        split (str): one of "train", "val", "test"
    """
    self._target_split = split
    self._target_df, self._target_size = self._lookup_dict[split]
```
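`set_split` only swaps which DataFrame `__len__` and `__getitem__` read from. A minimal sketch of the same lookup-dict pattern with a hypothetical `SplitView` class and toy DataFrame:

```python
import pandas as pd

class SplitView:
    """Hypothetical class reproducing SurnameDataset's split-switching logic."""

    def __init__(self, df):
        self._lookup_dict = {}
        for split in ("train", "val", "test"):
            split_df = df[df.split == split]
            self._lookup_dict[split] = (split_df, len(split_df))
        self.set_split("train")

    def set_split(self, split="train"):
        self._target_split = split
        self._target_df, self._target_size = self._lookup_dict[split]

    def __len__(self):
        return self._target_size

df = pd.DataFrame({"split": ["train"] * 7 + ["val"] * 2 + ["test"] * 1})
view = SplitView(df)
print(len(view))  # 7
view.set_split("val")
print(len(view))  # 2
```

Because one object serves all three splits, a training loop can switch to validation data with a single `set_split("val")` call instead of building a second dataset.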

6. `__len__` / `__getitem__`

- The core of a Dataset ★

- `__getitem__`: returns the vectorized surname and the index of its nationality.

```python
def __len__(self):
    return self._target_size

def __getitem__(self, index):
    """Main entry point for a PyTorch dataset

    Args:
        index (int): index of the data point
    Returns:
        a dict holding the data point's features (x_surname) and label (y_nationality)
    """
    row = self._target_df.iloc[index]

    surname_vector = \
        self._vectorizer.vectorize(row.surname)

    nationality_index = \
        self._vectorizer.nationality_vocab.lookup_token(row.nationality)

    return {'x_surname': surname_vector,
            'y_nationality': nationality_index}
```
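To make the returned dictionary concrete: the vectorizer is the topic of the next post, but one simple choice for an MLP is a collapsed one-hot (multi-hot) vector over a character vocabulary. A minimal sketch under that assumption, with a hypothetical lowercase character vocabulary and nationality mapping:

```python
import numpy as np

# Hypothetical vocabularies (the real ones are built by SurnameVectorizer)
char_to_idx = {ch: i for i, ch in enumerate("abcdefghijklmnopqrstuvwxyz")}
nationality_to_idx = {"English": 0, "Russian": 1}

def vectorize(surname):
    """Collapsed one-hot: 1.0 at the index of every character present (lowercased here)."""
    one_hot = np.zeros(len(char_to_idx), dtype=np.float32)
    for ch in surname.lower():
        one_hot[char_to_idx[ch]] = 1.0
    return one_hot

row = {"surname": "Anna", "nationality": "Russian"}
item = {"x_surname": vectorize(row["surname"]),
        "y_nationality": nationality_to_idx[row["nationality"]]}

print(item["x_surname"].sum())  # 2.0 -> only 'a' and 'n' are set; repeats count once
print(item["y_nationality"])    # 1
```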

7. get_num_batches method

```python
def get_num_batches(self, batch_size):
    """Given a batch size, return the number of batches the dataset can make

    Args:
        batch_size (int)
    Returns:
        number of batches
    """
    return len(self) // batch_size
```
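Because of the floor division, `get_num_batches` agrees with a `DataLoader` created with `drop_last=True` (the default in `generate_batches`): the last partial batch is dropped. A quick check with a hypothetical 10-item dataset:

```python
from torch.utils.data import DataLoader

data = list(range(10))  # hypothetical dataset with 10 items
batch_size = 3

num_batches = len(data) // batch_size  # same formula as get_num_batches
loader_drop = DataLoader(data, batch_size=batch_size, drop_last=True)
loader_keep = DataLoader(data, batch_size=batch_size, drop_last=False)

print(num_batches, len(loader_drop), len(loader_keep))  # 3 3 4
```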

▼ Full SurnameDataset code

```python
class SurnameDataset(Dataset):
    def __init__(self, surname_df, vectorizer):
        """
        Args:
            surname_df (pandas.DataFrame): the dataset
            vectorizer (SurnameVectorizer): a SurnameVectorizer object
        """
        self.surname_df = surname_df
        self._vectorizer = vectorizer

        self.train_df = self.surname_df[self.surname_df.split=='train']
        self.train_size = len(self.train_df)

        self.val_df = self.surname_df[self.surname_df.split=='val']
        self.validation_size = len(self.val_df)

        self.test_df = self.surname_df[self.surname_df.split=='test']
        self.test_size = len(self.test_df)

        self._lookup_dict = {'train': (self.train_df, self.train_size),
                             'val': (self.val_df, self.validation_size),
                             'test': (self.test_df, self.test_size)}

        self.set_split('train')

        # Compute class weights
        # Number of surnames per nationality, as a dict {nationality: count}
        class_counts = surname_df.nationality.value_counts().to_dict()

        def sort_key(item):
            # self._vectorizer: the SurnameVectorizer object
            # nationality_vocab: Vocabulary mapping nationalities to integers (attribute of SurnameVectorizer)
            # lookup_token: Vocabulary method returning the index of a token
            return self._vectorizer.nationality_vocab.lookup_token(item[0])

        # Sort the (nationality, count) pairs by each nationality's vocabulary index
        sorted_counts = sorted(class_counts.items(), key=sort_key)
        # Class weight = inverse of the class frequency
        frequencies = [count for _, count in sorted_counts]
        self.class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)

    @classmethod
    def load_dataset_and_make_vectorizer(cls, surname_csv):
        """Load the dataset and create a new SurnameVectorizer

        Args:
            surname_csv (str): location of the dataset
        Returns:
            an instance of SurnameDataset
        """
        # cls is the SurnameDataset class itself
        surname_df = pd.read_csv(surname_csv)
        train_surname_df = surname_df[surname_df.split=='train']
        # SurnameVectorizer.from_dataframe returns a SurnameVectorizer whose
        # surname_vocab and nationality_vocab are built from the training split.
        # Passing it to cls(...) creates the dataset, i.e. a SurnameDataset instance
        return cls(surname_df, SurnameVectorizer.from_dataframe(train_surname_df))

    @classmethod
    def load_dataset_and_load_vectorizer(cls, surname_csv, vectorizer_filepath):
        """Load the dataset and a previously saved SurnameVectorizer.
        Used when reusing a cached SurnameVectorizer.

        Args:
            surname_csv (str): location of the dataset
            vectorizer_filepath (str): location of the saved SurnameVectorizer
        Returns:
            an instance of SurnameDataset
        """
        surname_df = pd.read_csv(surname_csv)
        # load_vectorizer_only returns SurnameVectorizer.from_serializable(json.load(fp)),
        # i.e. a SurnameVectorizer instance rebuilt from the saved JSON
        vectorizer = cls.load_vectorizer_only(vectorizer_filepath)
        # Combine the DataFrame and the loaded vectorizer into a SurnameDataset instance
        return cls(surname_df, vectorizer)

    @staticmethod
    def load_vectorizer_only(vectorizer_filepath):
        """Static method that loads a SurnameVectorizer object from a file

        Args:
            vectorizer_filepath (str): location of the serialized SurnameVectorizer
        Returns:
            an instance of SurnameVectorizer
        """
        with open(vectorizer_filepath) as fp:
            # from_serializable rebuilds surname_vocab / nationality_vocab from the
            # deserialized dict and returns them wrapped in a SurnameVectorizer
            return SurnameVectorizer.from_serializable(json.load(fp))

    def save_vectorizer(self, vectorizer_filepath):
        """Save the SurnameVectorizer to disk as JSON

        Args:
            vectorizer_filepath (str): where to save the SurnameVectorizer
        """
        with open(vectorizer_filepath, "w") as fp:
            # to_serializable converts the vectorizer into a JSON-serializable dict
            json.dump(self._vectorizer.to_serializable(), fp)

    def get_vectorizer(self):
        """Return the vectorizer"""
        return self._vectorizer

    def set_split(self, split="train"):
        """Select the split to use, based on the split column of the DataFrame

        Args:
            split (str): one of "train", "val", "test"
        """
        self._target_split = split
        self._target_df, self._target_size = self._lookup_dict[split]

    def __len__(self):
        return self._target_size

    def __getitem__(self, index):
        """Main entry point for a PyTorch dataset

        Args:
            index (int): index of the data point
        Returns:
            a dict holding the data point's features (x_surname) and label (y_nationality)
        """
        row = self._target_df.iloc[index]

        surname_vector = \
            self._vectorizer.vectorize(row.surname)

        nationality_index = \
            self._vectorizer.nationality_vocab.lookup_token(row.nationality)

        return {'x_surname': surname_vector,
                'y_nationality': nationality_index}

    def get_num_batches(self, batch_size):
        """Given a batch size, return the number of batches the dataset can make

        Args:
            batch_size (int)
        Returns:
            number of batches
        """
        return len(self) // batch_size
```

```python
def generate_batches(dataset, batch_size, shuffle=True,
                     drop_last=True, device="cpu"):
    """
    A generator function that wraps PyTorch's DataLoader.
    Moves each tensor to the specified device.
    """
    dataloader = DataLoader(dataset=dataset, batch_size=batch_size,
                            shuffle=shuffle, drop_last=drop_last)

    for data_dict in dataloader:
        out_data_dict = {}
        for name, tensor in data_dict.items():
            out_data_dict[name] = tensor.to(device)
        yield out_data_dict
```
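Each batch that `generate_batches` yields is a dictionary of stacked tensors: the `DataLoader`'s default collation turns a list of per-item dicts into one dict with a tensor per key. A minimal sketch of that behavior with a hypothetical in-memory list of items shaped like `SurnameDataset.__getitem__` output, repeating the `.to(device)` step:

```python
import torch
from torch.utils.data import DataLoader

# Hypothetical items shaped like SurnameDataset.__getitem__ output
items = [
    {"x_surname": torch.full((5,), float(i)), "y_nationality": i % 3}
    for i in range(8)
]

loader = DataLoader(items, batch_size=4, shuffle=False, drop_last=True)
device = "cuda" if torch.cuda.is_available() else "cpu"

for data_dict in loader:
    # Same move-to-device step as generate_batches
    batch = {name: tensor.to(device) for name, tensor in data_dict.items()}
    print(batch["x_surname"].shape, batch["y_nationality"].tolist())
# torch.Size([4, 5]) [0, 1, 2, 0]
# torch.Size([4, 5]) [1, 2, 0, 1]
```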

<NEXT>

In this post, we looked at the code for a custom Dataset class that makes the surname dataset usable in PyTorch. In the next post, I'll cover the two classes this custom Dataset depends on:

- Vocabulary class: maps each token to its integer index

- Vectorizer class: applies the vocabularies to turn a surname string into a vector
